SERP to LLMs: What's Next for 2026?

An expert analysis of the foundational shift from the traditional Search Engine Results Page (SERP) to Large Language Model (LLM) powered answers. See the specific changes in content consumption and brand discovery expected in the coming year.

TL;DR

- Search is shifting from single-surface SERPs to a multi-surface ecosystem spanning AI assistants and social Q&A; visibility now requires winning on both pages and answers.

- As models reshape discovery, understanding what LLMs mean for search and leaning into AI search engine optimization helps your content get cited in AI overviews and chat responses.

- Modern AI ranking isn’t just about positions; it’s about presence, attribution, and whether assistants reference your brand in synthesized answers.

- To operationalize AI for SEO, evaluate emerging tools. Useful starting searches include “the best tools for converting SERP data to LLMs,” “best affordable SERP to LLM tools for startups,” and “top-rated platforms for converting search results into AI prompts.”

- The winning formula for 2026: Answer-first content, clear evidence, tight schema, fast performance, and placements on sources LLMs already trust.

LLMs Meaning in 2026: Why Search Is Moving Beyond Classic SERPs

Search isn’t a single doorway anymore. It’s a neighborhood: busy, lively, and changing rapidly. People still “Google it,” but they also ask AI assistants, browse social Q&A, and make decisions without ever clicking a blue link. That’s your opportunity. Treat search and AI as one integrated system and build for both today. Here’s the simple truth heading into 2026: the focus is shifting from pages to answers. This doesn’t eliminate SEO; it expands the playing field. To keep your brand visible and trusted, you’ll need a plan that covers both traditional SERPs and AI answers and that is measurable, repeatable, and free of fluff. Along the way, we’ll clarify what LLMs mean for marketers, the emerging signals shaping AI search engine optimization, why AI ranking now goes beyond positions, and how to implement AI for SEO efficiently without creating busywork.

Why 2026 Is the Tipping Point

Generative AI has become mainstream. By August 2025, 55% of U.S. adults reported using AI tools, up from roughly 44% a year earlier (Federal Reserve Bank of St. Louis). Pew Research found that 34% of Americans had tried ChatGPT by mid-2025, with younger adults leading adoption (Pew Research Center). Meanwhile, traditional search remains strong: SERPs continue to anchor most early-stage research, with 95% of Americans still using search engines monthly and “heavy” Google usage rising from 84% (2023) to 87% (2025), even as AI chat usage grew (Search Engine Land).

The key takeaway is behavior fragmentation. People switch between SERPs, AI overviews, chat assistants, and social Q&A during the same journey. SERPs still play a vital role in orientation, even as assistants summarize more of the process. Understanding what LLMs do within that journey matters: Language models synthesize trustworthy sources into answers, often before users see any traditional results. That’s where smart AI search engine optimization shines: Content that’s explicit, attributable, and structured enough for assistants to cite.

Practically, you’ll need to allocate resources to both surfaces. It’s not “SEO versus AI”; it's one integrated discipline. Expect your AI ranking story to include whether Gemini, ChatGPT, or Bing Copilot cite you in their final answers. And when you properly orchestrate data, structure, and authority, AI for SEO enhances your presence across both screens and speakers.

AI Answers Are Reshaping Clicks and Visibility

AI answers reduce organic clicks and pull attention upward, into summaries. Google’s AI Overviews appear in roughly 47% of search results and can take up nearly half the screen on mobile (Search Engine Journal). After their rollout, the #1 organic result’s click‑through rate dropped from 28% to 19% (a 32% decline), with the #2 result falling 39% (Search Engine Journal). Pew reports that 58% of users encountered at least one AI‑generated summary within a month (Pew Research Center). This shift forces marketers to rethink how SERPs function, not as the final destination but as one surface among several competing for the user’s first glance.
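The 32% figure above is a relative decline, not a percentage-point drop, and the two are easy to confuse in reporting. As a minimal sketch (the function and variable names are ours, for illustration only), the arithmetic looks like this:

```python
def relative_change(before: float, after: float) -> float:
    """Return the change from `before` to `after` as a fraction of `before`."""
    return (after - before) / before


# Click-through rates for the #1 organic result, as quoted in the paragraph above.
ctr_before = 0.28
ctr_after = 0.19

# Absolute drop, in percentage points.
point_drop = (ctr_before - ctr_after) * 100
# Relative decline, in percent of the original CTR.
relative_drop = -relative_change(ctr_before, ctr_after) * 100

print(f"{point_drop:.0f} points, {relative_drop:.0f}% relative decline")
# prints "9 points, 32% relative decline"
```

When you report your own CTR shifts, state which of the two numbers you mean; a 9-point drop and a 32% decline describe the same change.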

That makes “position = success” incomplete. For a modern AI ranking view, include answer presence and citations, not just SERP positions. If you’re pursuing AI search engine optimization, write for the answer box: Ask the question, give the complete answer, cite your sources, and make it scannable. Think in modules: A crisp summary, a step‑by‑step, a proof point. Those blocks translate well into AI overviews and assistants, which is practical AI for SEO. And because LLMs work by synthesis (not keyword matching), sources that clearly state facts and back them with evidence are most likely to be reused.

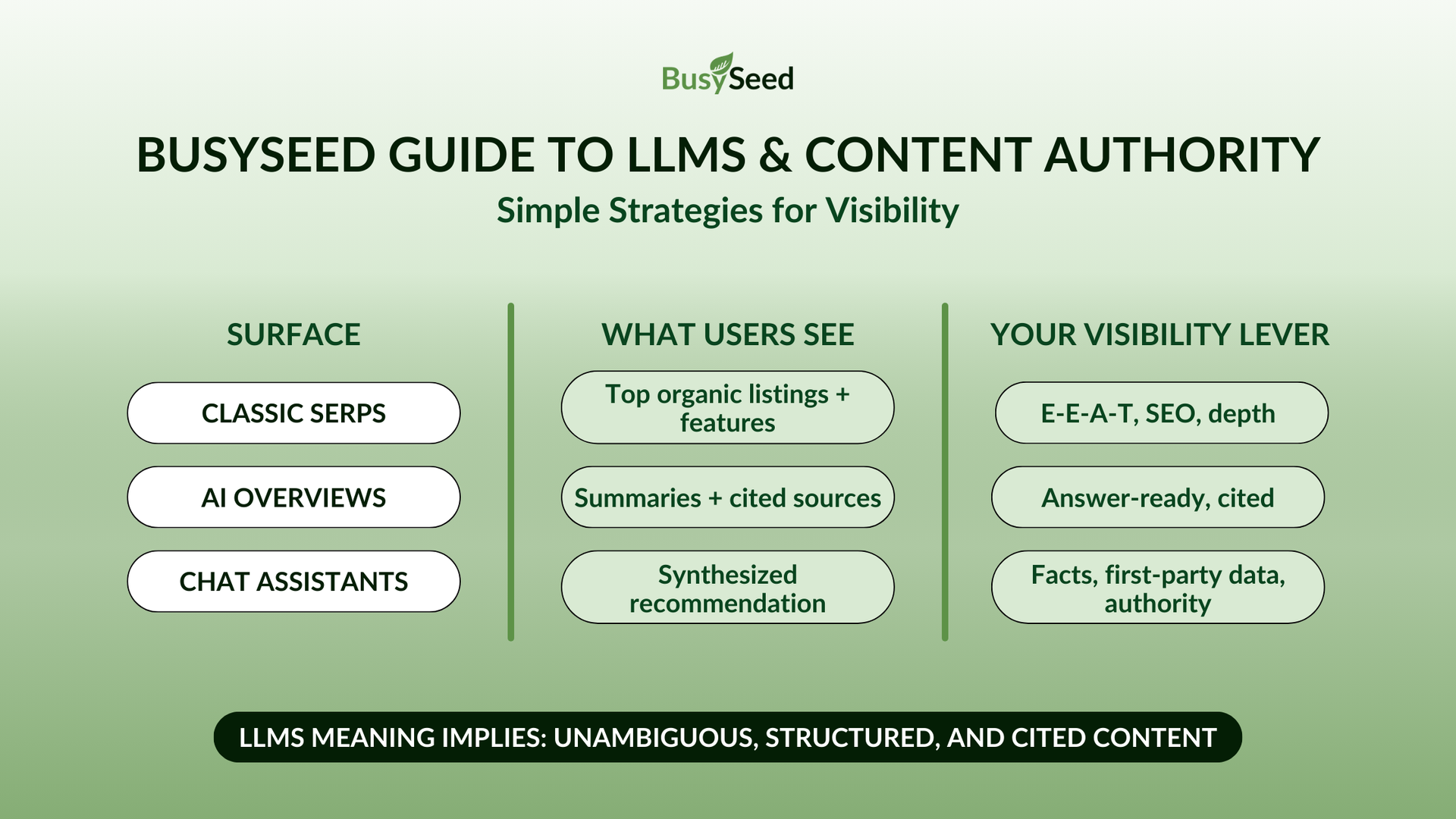

At-a-glance: Where attention is moving

| Surface | What Users See First | Your Visibility Lever |
|---|---|---|
| Classic SERPs | Top organic listings and features | E‑E‑A‑T, technical SEO, topic depth |
| AI Overviews | Summaries with cited sources | Answer‑ready content and citations |
| Chat assistants | One synthesized recommendation | Clear facts, first‑party data, authority |

What LLMs Meaning Implies for Your Content

Models read, summarize, and attribute. They don’t just match strings. The most practical takeaway for content leaders: Make your pages unambiguous, structured, and easily quotable. Research summarized by Search Engine Journal shows AI Overviews skew toward longer, explanatory queries and include exact keywords only about 5% of the time (Search Engine Journal). And because SERPs now combine traditional snippets with AI-generated summaries, your content must cater to both formats. That’s your cue to cover the entire topic on a single page: the “why,” the “how,” the “what if,” along with references.

In practice, this is modern AI search engine optimization: Write conversationally, start with the question, and offer specific answers that an assistant can copy word-for-word. Include diagrams, checklists, and evidence. Build a comprehensive FAQ that anticipates related questions; these are valuable for assistants and humans alike. Doing this consistently is the core of sustainable AI for SEO.

How to Optimize for “Search Everywhere” in 2026

Users bounce between Google, AI assistants, and social search. Your playbook should, too. Marketers increasingly treat ChatGPT as a search channel and cite TikTok as a discovery platform (Forbes). Meanwhile, SERPs remain the grounding layer where users compare options or validate what assistants tell them. That’s the moment to unify AI search engine optimization with social Q&A and classic SEO, so your brand shows up wherever answers are formed.

- Foundation: Indexable, fast pages with clean schema; robust internal linking; crystal‑clear authorship and sourcing.

- Answer‑readiness: Question‑led H2s, concise summaries, step‑by‑step sections, and visible data/citations.

- Social resonance: Short explainers and Q&A snippets that map cleanly into assistant‑style answers; fuel for AI for SEO.

Measure what matters. Your AI ranking isn’t just a position number; it’s also your presence in AI overviews and chat responses. Keep a dynamic list of priority questions and test them in ChatGPT, Gemini, and Copilot. The operational lesson is simple: Trusted, structured, and fast content wins; ambiguous, slow, or unclear content gets sidelined. If you want a team that already works across these layers, explore how BusySeed approaches integrated growth and AI visibility. We’re ready when you are!

Authority Signals That Earn Citations Inside AI Answers

Assistants value trust and clarity. Studies show Google’s AI Overviews skew toward authoritative sites, especially in health and tech (Search Engine Journal). That aligns with the core of AI search engine optimization: Demonstrate expertise, mark it up correctly, and make proofs obvious.

- Schema depth: Organization, Article, FAQ, HowTo, Product, and Review, kept pristine and consistent.

- Expertise on the page: Author bios with credentials; links to original research; transparent methods.

- First‑party data: Unique stats, studies, or frameworks; these are force multipliers for AI for SEO and AI ranking.

- Third‑party validation: Appear on publications that AI already favors; Search Engine Land outlines why cross‑platform authority compounds (Search Engine Land).
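To make the schema bullet concrete, here is a minimal sketch of generating FAQ markup from question-and-answer pairs. It assumes the schema.org `FAQPage` shape (`mainEntity` holding `Question` items, each with an `acceptedAnswer`); the helper name `build_faq_jsonld` and the sample Q&A are illustrative, not part of any particular CMS or plugin.

```python
import json


def build_faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


# Hypothetical Q&A content; swap in the questions your page actually answers.
faq = build_faq_jsonld([
    (
        "What kind of content is most often cited in AI answers?",
        "Pages that state the question plainly and provide a complete, sourced answer.",
    ),
])

# Wrap the JSON in the script tag that page templates typically embed.
script_tag = (
    '<script type="application/ld+json">\n'
    + json.dumps(faq, indent=2)
    + "\n</script>"
)
print(script_tag)
```

Generating markup from the same source as the visible Q&A is one way to keep the two consistent; treat the output as a draft and run it through a structured data validator before shipping.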

It comes back to how LLMs work: Models assemble answers from sources they can trust and cite. Once you’re repeatedly selected for one topic, that trust ripples into adjacent queries.

Target the Sources LLMs Already Trust

Don’t spray and pray. Map the publications, guides, and datasets that consistently appear in AI answers for your category, then concentrate your outreach there. Experts recommend prioritizing review sites, standards bodies, and industry explainers that assistants often reference (Search Engine Land). In many B2B verticals, a small set of brands captures a disproportionate share of AI mentions (Search Engine Journal).

This is your strategic lens on AI ranking: Get named where the conversation is already happening. Fold those targets into your AI search engine optimization sprints. Treat each win as a signal that boosts AI for SEO momentum. And keep the core principle front and center: Be explicit, citeable, and attributable.

How to Measure AI Visibility While Reporting Matures

There’s no universal “AI rank tracker” yet, but there are solid proxies. Search Engine Land recommends blending directional metrics until platform reporting improves (Search Engine Land):

- Detectable referrals from AI platforms and assistants.

- Branded search and direct traffic lifts that correlate with AI usage in your category.

- Answer presence sampling in ChatGPT, Gemini, and Copilot for your priority questions.

Teams often pair this with the top-rated platforms for converting search results to AI prompts, which streamline how sampled questions translate into measurable insights. That mix lets you benchmark AI ranking without perfect transparency. Tie experiments to outcomes: Launch a structured FAQ, then look for branded search upticks. As you iterate, document your AI for SEO changes and keep AI search engine optimization metrics next to traditional KPIs. For analytics teams, the shift that LLMs bring is humbling but healthy: Assistants influence decisions that may never create a click. Align your models to that reality.
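Answer presence sampling can start as a spreadsheet-sized exercise. The sketch below assumes you have already collected answers by hand (or with whatever tooling you use) as `(assistant, question, answer)` rows; the brand name, questions, and answers are hypothetical, and no assistant API is called.

```python
import re
from collections import defaultdict


def brand_mentioned(answer, brand_terms):
    """True if any brand term appears in the answer as a whole phrase, ignoring case."""
    return any(
        re.search(rf"\b{re.escape(term)}\b", answer, flags=re.IGNORECASE)
        for term in brand_terms
    )


def presence_rate(samples, brand_terms):
    """Share of sampled answers, per assistant, that mention the brand."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for assistant, _question, answer in samples:
        totals[assistant] += 1
        hits[assistant] += brand_mentioned(answer, brand_terms)
    return {assistant: hits[assistant] / totals[assistant] for assistant in totals}


# Hypothetical sampled answers for a fictional brand, "Acme Widgets".
samples = [
    ("ChatGPT", "best widget supplier?", "Popular options include Acme Widgets and Globex."),
    ("ChatGPT", "how to choose a widget?", "Compare load ratings and warranty terms."),
    ("Gemini", "best widget supplier?", "Many buyers start with Globex."),
    ("Copilot", "best widget supplier?", "acme widgets is frequently recommended."),
]

print(presence_rate(samples, ["Acme Widgets"]))
# prints {'ChatGPT': 0.5, 'Gemini': 0.0, 'Copilot': 1.0}
```

Assistant answers vary from run to run, so treat a single sample as directional. Re-sample the same question list on a fixed cadence and watch the trend rather than any one number.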

Technical Foundations That Help LLMs Fetch and Trust Your Site

Speed, uptime, and clean markup aren’t “nice to have.” They’re the cost of entry. A large tech firm saw a 1,200% spike in assistant referrals when users began asking AI for recommendations, and brands with stronger performance suffered fewer traffic losses as AI features expanded (Forbes). Performance is now inseparable from AI search engine optimization, because assistants prioritize pages they can fetch, parse, and attribute quickly. In other words, engineers and SEOs are on the same team now: If your content can’t be fetched and parsed fast, you won’t make the answer, which hurts both your AI ranking and the return on your AI for SEO work.

Minimum viable readiness checklist:

- Sub‑second TTFB on key templates; strong Core Web Vitals across device classes.

- Edge caching and robust uptime; avoid fragile client‑side rendering for primary content.

- Clean, consistent schema across entities; zero structured data errors.

- Stable URL patterns, canonical discipline, and prioritized sitemaps.

- Accessible, semantic HTML so both users and parsers succeed.
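For the structured data item in the checklist, a lightweight pre-deploy check can catch the most basic failures. This is a sketch using only the Python standard library; `JsonLdExtractor` and `audit_jsonld` are names we made up, and the check covers only two things: that each JSON-LD block parses, and that each item declares an `@type`. It is not a substitute for a full schema validator.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the raw text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.blocks.append("".join(self._buffer))
            self._in_jsonld = False


def audit_jsonld(html):
    """Return a list of problems found in a page's JSON-LD; an empty list means none found."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    if not parser.blocks:
        return ["no JSON-LD blocks found"]
    problems = []
    for index, raw in enumerate(parser.blocks):
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as error:
            problems.append(f"block {index}: invalid JSON ({error.msg})")
            continue
        # A block may be a single object, a list of objects, or an @graph wrapper.
        if isinstance(data, dict) and "@graph" in data:
            items = data["@graph"]
        elif isinstance(data, list):
            items = data
        else:
            items = [data]
        for item in items:
            if not isinstance(item, dict) or "@type" not in item:
                problems.append(f"block {index}: item missing @type")
    return problems


page = '<script type="application/ld+json">{"@context": "https://schema.org", "@type": "Organization", "name": "Example Co"}</script>'
print(audit_jsonld(page))
# prints []
```

Run it against the rendered HTML of each key template. An empty list only means these two basic checks passed.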

Local and E‑commerce: Competing as AI Flattens the Field

AI can level or tilt the playing field, depending on your readiness. Analyses indicate that AI results often surface neutral guides or Q&As where credible local players can break through (Search Engine Journal). If you’re scrappy, cement local authority with precise hours, parking info, neighborhood context, and well‑structured reviews. If you’re established, double down on brand cues and consistency so assistants confidently recommend you.

In retail and CPG, assistants increasingly shortlist products before users ever click. That makes pristine product schema, review markup, and inventory signals essential. Buying guides that state criteria transparently tend to be cited. Win those, and your AI ranking improves without necessarily chasing more links. Pair that with pragmatic AI for SEO execution. For teams wanting a guided path, BusySeed can help you roll this out without bloat. Start a conversation!

Tools That Move You From SERP Data to LLM‑Ready Insights

Your stack probably combines SERP capture, answer‑presence sampling, content modeling, and schema validation. Teams exploring the best tools for converting SERP data to LLMs can use this workflow as a baseline for evaluating what belongs in their stack. Since SERPs still provide the clearest structured view of demand, combining them with AI-answer sampling gives teams a comprehensive view. There’s no “perfect” platform yet, so start light and iterate:

- Capture SERP + AI features: Crawlers that detect AI boxes and shifts in snippet composition.

- Coverage graders: Tools that map question clusters to your content gaps and evidence needs.

- Schema validators: Guardrails that keep markup clean and consistent across templates.

Use the tools you have, stitch in a few targeted capabilities, and cycle outputs into your editorial calendar and engineering backlog. As your visibility grows, your reporting will get sharper. When you’re ready to operationalize across teams, BusySeed can help align stakeholders and define the right milestones. This is where your team’s AI ranking evolution becomes visible to the business.

Teams and KPIs: Leading the Next Era

Leaders are expanding KPIs from rankings alone to “presence in AI features” and answer share‑of‑voice (Forbes). Many also admit they’re underprepared to measure AI search. That’s okay; the field is still evolving. Focus on building cross‑functional rhythms that unite editorial, analytics, and engineering:

- Editorial: Explicit Q&A segments, short answers, long‑form evidence pages.

- Analytics: Branded search lifts, assistant referrals, and sampled AI answer presence.

- Engineering: Performance, schema, crawlability, treated as revenue‑critical.
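For the analytics item, assistant referrals can be tallied from whatever referrer data you already export. The sketch below is illustrative: the hostname list is an assumption about how these assistants currently identify themselves, so verify it against your own logs, and remember that many assistant-driven visits arrive with no referrer at all.

```python
from collections import Counter
from urllib.parse import urlparse

# Assumed referrer hostnames; confirm these against your own analytics data.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
}


def classify_referrer(referrer):
    """Map a referrer URL to an assistant name, or 'other' if it is not recognized."""
    host = (urlparse(referrer).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return AI_REFERRER_HOSTS.get(host, "other")


def count_assistant_referrals(referrers):
    """Count visits per assistant from an iterable of referrer URLs."""
    return Counter(classify_referrer(referrer) for referrer in referrers)


# Hypothetical referrer export.
referrers = [
    "https://chatgpt.com/",
    "https://www.google.com/search?q=widgets",
    "https://gemini.google.com/app",
    "https://chatgpt.com/c/abc",
    "",
]

print(count_assistant_referrals(referrers))
# prints Counter({'ChatGPT': 2, 'other': 2, 'Gemini': 1})
```

Because the "other" bucket also absorbs missing referrers, read these counts as a floor on assistant-driven traffic, and pair them with the branded search and answer presence proxies.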

Quick reference: Your integrated roadmap

- Ship answer‑first content with evidence on high‑intent topics.

- Instrument proxies for AI visibility and track branded search deltas.

- Target publications assistants already cite in your category.

- Harden performance and markup so your content is fetchable and citeable.

- Review quarterly: Retire what’s thin, double down on what assistants reuse.

FAQ

Q1). How do I know if AI assistants are driving demand when I can’t see all referrals?

Triangulate. Look for lifts in branded search and direct traffic that correlate with expanded AI features in your category. Sample priority questions in ChatGPT, Gemini, and Copilot. When you publish new Q&A content or fix schema, annotate the change and watch downstream movement in GA4 and GSC. Search Engine Land outlines this proxy approach well (Search Engine Land).
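One simple way to "watch downstream movement" is to compare average daily branded clicks before and after an annotated change. This sketch assumes you have exported daily branded click counts (for example, from a search performance report) into a date-keyed dictionary; the numbers and the function name `lift_after_change` are hypothetical.

```python
from datetime import date
from statistics import mean


def lift_after_change(daily_clicks, change_date):
    """Relative change in mean daily clicks from before `change_date` to on/after it."""
    before = [clicks for day, clicks in daily_clicks.items() if day < change_date]
    after = [clicks for day, clicks in daily_clicks.items() if day >= change_date]
    if not before or not after:
        raise ValueError("need at least one day on each side of the change date")
    baseline = mean(before)
    if baseline == 0:
        raise ValueError("baseline is zero; relative lift is undefined")
    return (mean(after) - baseline) / baseline


# Hypothetical branded-search clicks around a FAQ launch on 4 March.
daily_clicks = {
    date(2025, 3, 1): 100,
    date(2025, 3, 2): 110,
    date(2025, 3, 3): 90,
    date(2025, 3, 4): 120,
    date(2025, 3, 5): 130,
    date(2025, 3, 6): 110,
}

lift = lift_after_change(daily_clicks, date(2025, 3, 4))
print(f"{lift:.0%}")
# prints "20%"
```

A before/after comparison shows correlation, not cause. Use longer windows than this toy example, and check for seasonality or campaigns that overlap the change date before crediting the lift to your update.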

Q2). What kind of content is most often cited in AI answers?

Pages that state the question plainly and provide a complete, sourced answer, ideally with first‑party data. Studies show longer, explanatory queries trigger AI overviews, and exact-match keywords matter less than coverage and clarity (Search Engine Journal). Add author credentials, link to original studies, and include structured data to make attribution easy.

Q3). My top rankings lost clicks this year. What can I do now?

Optimize for answer presence, not just positions. Update your top pages with question‑led headings, short summaries, and evidence blocks that assistants can quote. Ensure performance and schema are flawless. After the changes, monitor branded search and the presence of sampled answers for your core questions (Search Engine Journal).

Q4). How should multi‑location brands adapt to AI‑driven discovery?

Standardize local data (hours, parking, neighborhoods) and ensure consistency across your site and listings. Publish city‑specific FAQs and buyer’s guides that answer nearby intent. Analyses suggest that AI can surface credible local options from neutral guides, so make sure yours is the easiest to cite (Search Engine Journal).

Q5). What does “fast enough” mean for performance in an AI‑heavy world?

For key templates, aim for sub‑second TTFB and healthy Core Web Vitals on both mobile and desktop. Keep primary content server‑rendered where possible, cache at the edge, and keep a clean, consistent schema. Performance and uptime are now as much a discoverability issue as a UX concern (Forbes).

Bringing It All Together

By 2026, discovery will flow across Google, AI assistants, and social Q&A in a single, continuous motion. Winning brands will think beyond links and make their expertise usable, by people and by models. That looks like answer‑first pages backed by evidence, impeccable schema, speed, and strategic placements on sources assistants already cite. You’ll measure not just rankings, but presence in AI features and the brand lift that follows. If you’d like a partner who brings warmth, clarity, and serious chops to the work, BusySeed is here to help you turn this vision into momentum.

Let’s future‑proof your search strategy now, so your brand is the calm, trusted voice at the top of the answer, wherever customers ask.

Works Cited

“AI Tool Adoption Surges, Search Stays Strong.” Search Engine Land, 2025, https://searchengineland.com/ai-tool-adoption-surges-search-stays-strong-461235.

Drenik, Gary. “AI Search Is Reshaping Consumer Behavior and Brands Must Adapt.” Forbes, 12 June 2025, https://www.forbes.com/sites/garydrenik/2025/06/12/ai-search-is-reshaping-consumer-behavior-and-brands-must-adapt/.

Federal Reserve Bank of St. Louis. “The State of Generative AI Adoption in 2025.” On the Economy, Nov. 2025, https://www.stlouisfed.org/on-the-economy/2025/nov/state-generative-ai-adoption-2025.

Pew Research Center. “Americans’ Use of ChatGPT Is Ticking Up, but Few Trust Its Election Information.” 26 Mar. 2024, https://www.pewresearch.org/short-reads/2024/03/26/americans-use-of-chatgpt-is-ticking-up-but-few-trust-its-election-information/.

Pew Research Center. “What Web-Browsing Data Tells Us About How AI Appears Online.” 23 May 2025, https://www.pewresearch.org/data-labs/2025/05/23/what-web-browsing-data-tells-us-about-how-ai-appears-online/.

Search Engine Journal. “Google AI Overviews Trending Toward Authoritative Sites.” 2024, https://www.searchenginejournal.com/google-ai-overviews-trending-toward-authoritative-sites/540937/.

Search Engine Journal. “Google CTRs Drop 32% for Top Result After AI Overview Rollout.” 2025, https://www.searchenginejournal.com/google-ctrs-drop-32-for-top-result-after-ai-overview-rollout/551730/.

Search Engine Journal. “Study: Google AI Overviews Appear in 47% of Search Results.” 2024, https://www.searchenginejournal.com/study-google-ai-overviews-appear-in-47-of-search-results/535096/.

Search Engine Journal. “Studies Suggest How to Rank on Google’s AI Overviews.” 2024, https://www.searchenginejournal.com/studies-suggest-how-to-rank-on-googles-ai-overviews/532809/.

Search Engine Journal. “What 2025’s AI‑Driven SERP Changes Mean for Multi‑Location Brands.” 2025, https://www.searchenginejournal.com/2025-ai-serp-changes-dac-spa/553864/.

Search Engine Land. “How AI Is Reshaping SEO: Challenges, Opportunities and Brand Strategies for 2025.” 2025, https://searchengineland.com/how-ai-is-reshaping-seo-challenges-opportunities-and-brand-strategies-for-2025-456926.

Search Engine Land. “LLM Optimization: Tracking Visibility in AI Discovery.” 2025, https://searchengineland.com/llm-optimization-tracking-visibility-ai-discovery-463860.