Brand Safety & AI Ads: How Ethical Failures Kill Performance

AI-based ad placements like Meta's Advantage+ can inadvertently associate brands with unsafe or misleading content if algorithmic and data oversight is lacking. Maintaining ethical standards in content sourcing and targeting protects brand reputation and campaign effectiveness.

TL;DR

- Consumers penalize brands for unsafe adjacencies; trust and ROAS fall when ads appear near misinformation or offensive content.

- Programmatic scale plus automation magnifies risk; fraud sites and junk inventory siphon budget and data.

- Platform safety features help, but they’re not set-it-and-forget-it; human oversight and clear governance are essential.

- Treat ethics like a performance lever: define standards, wire them into workflows, and measure them as KPIs.

- If you want a partner who makes safety and ROI play nice, talk to BusySeed. We’ll build a defensible system that scales the right way.

Why does brand safety matter more in the era of AI automation?

Because consumers punish bad context, and machines scale mistakes fast. In AI ads, brand safety and ethical standards directly impact performance across every ad campaign. When a placement misfires, you don’t just risk reputation; you lose clicks, conversions, and revenue. The stakes are that simple.

Audiences notice context. In January 2024, IAS reported that 82% of U.S. consumers say it’s essential that online ads run next to “appropriate” content; 75% view brands less favorably if their ads appear beside misinformation; and 51% would stop using a brand if an ad appears near offensive content (IAS). Those numbers translate into fewer clicks, lower conversion rates, and reduced LTV. Unsuitable adjacency is a performance tax, whether you’re running AI ads across social, search, or programmatic.

The buying landscape compounds the stakes. Over 70% of digital spend is programmatic today, and 60% of agencies and brands rank brand safety among their top concerns because millions still leak into low-quality placements (WARC via MediaBrief). Put plainly: if your AI automation runs blind, you can’t optimize what you can’t trust.

How do unsafe adjacencies translate into lost performance?

Unsafe adjacencies drain ROAS by eroding trust, nullifying creative, and spiking post-click drop-off. Even a best-in-class creative will under-deliver if it’s placed next to harmful or misleading content in a live ad campaign. This is where brand safety stops being a policy and becomes profit protection across your AI ads stack.

- Lower quality traffic: Users exposed to questionable placements bounce more and convert less.

- Attribution distortion: Junk inventory manufactures false “view-through” credit, inflating channels that don’t truly contribute to revenue.

- Long-term brand damage: Consumers change behavior (e.g., stop purchasing) after unsafe exposures, so future acquisition becomes harder and pricier (IAS).

The solution set is operational: context-aware controls, better inventory curation, and continuous auditing. Employing ethical standards in AI automation safeguards the performance multipliers you’re already paying for: audience data, creative testing, and bid strategies inside every ad campaign.

What makes programmatic and AI automation vulnerable to ethical failures?

Scale outruns oversight. When spending is allocated in real time across a vast inventory, AI automation makes thousands of decisions before human eyes can catch issues. That’s precisely how AI ads end up supporting questionable publishers or content and undermining brand safety and ethical standards, and why a single misclassification can ripple across every ad campaign.

- NewsGuard identified hundreds of “Unreliable AI-Generated News” (UAIN) sites intended to siphon programmatic dollars across multiple languages (Dentsu). In mid-2023, 393 programmatic ads from 141 major brands showed up on 55 UAIN domains (Newsweek).

- WARC’s research shows marketers are spending millions on low-quality placements that violate safety criteria (MediaBrief).

Ethical failures also surface inside the workflow. An IAB/Aymara survey found over 70% of marketers encountered AI automation errors (hallucinated claims, biased creatives, off-brand targeting), while only ~35% intended to strengthen governance in the next year (IAB). That gap guarantees recurring mistakes in AI ads if brand safety and human oversight aren’t baked into your ad campaign playbook.

How should teams build ethical standards into every ad campaign?

Write them down, make them measurable, and wire them into purchasing, creative, and reporting from day one. Start by codifying ethical standards and enforcing them at every ad campaign kickoff. That way, brand safety scales with your AI ads rather than playing catch-up later.

- Governance: Adopt a simple, team-readable policy that defines unacceptable placements, sensitive categories, audience protections, disclosure requirements, and escalation paths. The European Advertising Council’s 12-guideline framework highlights human oversight, labeling of AI-generated content, and safeguarding vulnerable groups (The Brussels Times).

- Controls scope: Translate policy into real platform settings and partner requirements. That means standardized inclusion lists, verified marketplaces, third-party verification, and transparent reporting.

- People and process: Assign clear owners for reviews (pre-flight checks, mid-flight audits, post-campaign debriefs) and create a fast path to pause spend when issues arise.

These aren’t compliance chores; they’re performance multipliers. Teams that consistently execute against a clear standard compound their advantages: they improve inventory quality, clean up data, and achieve more predictable outcomes. If you want a shortcut to a working blueprint, BusySeed can help align policy and platform across your AI ads so every ad campaign benefits.

What practical controls reduce risk without throttling scale?

Use layered defenses rather than blunt instruments. Keyword blocklists alone can backfire. Dentsu notes that banning a term like “gay” to avoid sexual content can erase LGBTQ news entirely, hurting inclusivity and wasting inventory (Dentsu). Combine AI automation with people who understand context, language nuance, and culture to protect brand safety at full scale in AI ads.

- Pre-bid brand-safety filters from third-party partners, with sensitivity tuned to your category and risk tolerance.

- Inclusion lists (always-on, human-maintained) for premium publishers; de-prioritize open exchange when your strategy doesn’t require it.

- Verification tags and measurement parity across partners for apples-to-apples comparisons.

- Platform-native tools: Google and Meta placement controls matter; turn them on and audit outcomes (Google; Social Discovery Insights).

- Context modeling rather than raw keyword blocks, with a cadence to refresh your taxonomy as news cycles and threats change.

- For content inputs, align procurement with ethical standards so your creative pipelines don’t introduce risk upstream.

These steps don’t just keep things clean; they expand reach responsibly. They’re the difference between cautious throttling and confident scale in AI ads while upholding brand safety across each ad campaign.
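To make the over-blocking problem concrete, here is a minimal Python sketch comparing a raw keyword blocklist with a context-based check. The page titles, the blocked terms, and the `context_label` field are hypothetical; the labels stand in for whatever a contextual classifier or human reviewer would supply, and nothing here reflects a real vendor's API.

```python
from dataclasses import dataclass


@dataclass
class Page:
    title: str
    context_label: str  # hypothetical label from a classifier or human reviewer


BLOCKED_TERMS = {"gay", "shooting"}          # illustrative raw keyword blocklist
UNSUITABLE_CONTEXTS = {"adult", "violence"}  # illustrative context categories


def keyword_block(page: Page) -> bool:
    """Block if any blocked term appears as a word in the title."""
    words = {w.strip(".,:;!?").lower() for w in page.title.split()}
    return bool(words & BLOCKED_TERMS)


def context_block(page: Page) -> bool:
    """Block based on what the page is about, not on the words it uses."""
    return page.context_label in UNSUITABLE_CONTEXTS


pages = [
    Page("Gay rights ruling reshapes city policy", "news"),
    Page("Photographer shooting wildlife wins award", "arts"),
    Page("Graphic footage of street fight", "violence"),
]

for page in pages:
    print(f"{page.title!r}: keyword={keyword_block(page)}, context={context_block(page)}")
```

In this toy data the keyword list blocks two suitable pages and misses the one unsuitable page, while the context check does the opposite. Real classifiers make mistakes too, which is why the list above pairs them with human-maintained inclusion lists.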

How do you keep data secure while centralizing it, without compromising personalization?

Architect your stack so protection and performance reinforce each other. Protect identifiable information by design and ensure access is role-based; secure data is a growth asset, not a bottleneck. Build segments and lookalikes without moving raw files across vendors; keep secure data inside governed environments even as your AI ads evolve.

- Use clean rooms and hashed matching to connect identity while minimizing exposure. This lets you attribute and optimize without transporting raw PI, keeping data secure throughout the entire ad campaign.

- Enforce least-privilege access models across your CDP, DSP, and analytics tools so secure data access remains tightly controlled.

- Apply event-level encryption in transit and at rest, especially for high-frequency conversion streams, to protect secure data as signals increase.

- Limit vendor sprawl. Fewer hops means fewer risks and simpler compliance around secure data.

- Monitor usage and policy adherence continuously with audit logs and automated alerts to safeguard secure data throughout every ad campaign.
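The hashed matching mentioned in the list above can be sketched as follows. This is a minimal illustration, assuming both parties normalize email addresses the same way (trimmed and lowercased) and hash them with SHA-256; the function names and sample addresses are hypothetical. Plain hashing is pseudonymization rather than anonymization, so a production clean room would layer further controls on top.

```python
import hashlib


def normalize_email(email: str) -> str:
    """Trim whitespace and lowercase so both sides hash identical strings."""
    return email.strip().lower()


def hash_email(email: str) -> str:
    """Return the SHA-256 hex digest of the normalized email."""
    return hashlib.sha256(normalize_email(email).encode("utf-8")).hexdigest()


def match_hashed(advertiser_emails, partner_hashes):
    """Intersect hashed identifiers; raw emails never leave the advertiser side."""
    own_hashes = {hash_email(e) for e in advertiser_emails}
    return own_hashes & set(partner_hashes)


# Hypothetical data: the partner shares only hashes, never raw addresses.
crm_emails = ["Ana@Example.com ", "bo@example.com"]
partner_hashes = {hash_email("ana@example.com"), hash_email("cy@example.com")}

overlap = match_hashed(crm_emails, partner_hashes)
print(f"{len(overlap)} matched identifier(s)")
```

Normalizing before hashing matters because a single stray space or capital letter produces a completely different digest and silently lowers the match rate.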

Most importantly, align creative and media testing with these constraints so teams don’t attempt shortcuts. Personalization doesn’t require reckless sharing when your architecture is built to responsibly harness secure data. If you need a practical way to wire ethical standards into your AI ads, our team at BusySeed will map it to your stack.

Where do AI systems go wrong, and how do we fix them?

They optimize for the wrong thing, or they act on low-integrity inputs. That’s why you see “winner” segments that perform in-platform but fail to generate incrementality. This is where brand safety and secure data discipline intersect with AI ads optimization.

- “Lowest-CPM wins”: Systems exploit cheap, low-quality supply that looks great on CTR but fails your conversion quality check.

- Content misclassification: Automation misses nuance (e.g., satire vs. misinformation) and your brand shows up where it shouldn’t, hurting brand safety.

- Creative hallucinations: Generative systems embellish claims or skew tone; over 70% of marketers have seen these errors (IAB).

Fixes that work in the wild:

- Human-in-the-loop checkpoints on surprising recommendations. If a placement, audience, or creative feels off-brand, require approval before it hits your ad campaign.

- Bias and hallucination tests embedded in creative QA. Short, repeatable prompts and negative-control phrases catch issues early.

- Offline quality gates. Post-click quality metrics and cohort LTV thresholds feed back into bidding, not just click-based signals, to keep AI ads honest.
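As a rough illustration of an offline quality gate, the sketch below ranks two supply sources first by CPM and then by cost per qualified conversion. The supply names, the spend figures, and the `qualified_conversions` field (conversions that passed a post-click quality check) are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Supply:
    name: str
    spend: float
    impressions: int
    conversions: int
    qualified_conversions: int  # hypothetical: passed a post-click quality check

    @property
    def cpm(self) -> float:
        """Cost per thousand impressions."""
        return self.spend / self.impressions * 1000

    @property
    def cost_per_qualified(self) -> float:
        """Cost per conversion that survived the quality gate."""
        if self.qualified_conversions == 0:
            return float("inf")
        return self.spend / self.qualified_conversions


sources = [
    Supply("open_exchange_bundle", 500.0, 1_000_000, 40, 4),
    Supply("curated_marketplace", 500.0, 100_000, 25, 20),
]

cheapest_by_cpm = min(sources, key=lambda s: s.cpm)
cheapest_by_quality = min(sources, key=lambda s: s.cost_per_qualified)

print("Lowest CPM:", cheapest_by_cpm.name)
print("Lowest cost per qualified conversion:", cheapest_by_quality.name)
```

With these made-up numbers the two rankings disagree, which is the failure mode "lowest-CPM wins" describes: the cheap supply looks efficient until conversions are filtered for quality.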

Which KPIs and feedback loops align AI automation with ethical standards?

Make your values measurable. Shift optimization from raw clicks to outcomes you actually care about: brand suitability pass rates, verified attention, conversion quality, and cohort-level LTV. Codify brand safety as optimization constraints at the same level as performance. This keeps all AI ads decisions within guardrails across every ad campaign.

- Define thresholds for suitability, IVT rates, and publisher integrity scores.

- Feed verified events back to platforms and third-party bidders as negative or positive signals.

- Use incrementality testing to prevent systems from over-crediting low-quality touchpoints.

- Put compliance metrics on the same dashboard as ROAS so trade-offs are visible in real time.
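One way to treat these thresholds as optimization constraints is a small guardrail check that runs before a placement is eligible for more budget. The sketch below is illustrative only: the metric names and limits are assumptions chosen for the example, not industry standards or platform settings.

```python
# Illustrative guardrails; metric names and limits are assumptions.
THRESHOLDS = {
    "suitability_pass_rate": ("min", 0.95),
    "ivt_rate": ("max", 0.02),
    "publisher_integrity_score": ("min", 70),
}


def guardrail_violations(metrics: dict) -> list:
    """Return a human-readable list of thresholds the placement fails."""
    failed = []
    for name, (kind, limit) in THRESHOLDS.items():
        value = metrics.get(name)
        if value is None:
            failed.append(f"{name} is missing")
        elif kind == "min" and value < limit:
            failed.append(f"{name}={value} is below minimum {limit}")
        elif kind == "max" and value > limit:
            failed.append(f"{name}={value} is above maximum {limit}")
    return failed


placement = {
    "suitability_pass_rate": 0.90,
    "ivt_rate": 0.05,
    "publisher_integrity_score": 82,
}

problems = guardrail_violations(placement)
print("PAUSE" if problems else "OK", problems)
```

Treating a missing metric as a violation is a deliberate choice in this sketch: a placement that cannot be measured should not pass by default.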

When ethical standards and outcomes share a dashboard, your teams stop treating policy as a tax and start treating it as a performance driver. For a turnkey approach, BusySeed can implement this feedback loop across your AI ads and secure data stack.

Which tools and partners set you up for success?

Look for partners who are transparent, enforceable, and interoperable with your stack. Avoid black boxes. Favor integrations that respect secure data and make auditing straightforward. When evaluating the best examples of ethical AI in advertising, insist on proof: logs, controls, and measurable outcomes. All of this supports brand safety and keeps your AI ads and each ad campaign on track.

- Verification and suitability partners: IAS and peers offer pre-bid and post-bid tools to enforce context and see what slipped through (IAS).

- Publisher curation: Verified private marketplaces and direct deals with reputable outlets reduce exposure to churn-and-burn supply.

- Creative tooling: Prioritize AI that includes claims checking, tone guardrails, and approval workflows, not just idea generation.

- Sourcing and training data: Choose content sourcing that aligns with ethical standards so your creatives and models don’t ingest toxic or infringing material.

If you want help sorting the signal from the noise, we do this every day at BusySeed. Our team road-tests vendors, builds control planes, and integrates reporting so your operators don’t have to guess. Start here so your next ad campaign and AI automation can be safer and stronger.

How do Google and Meta’s latest safety features change your playbook?

They set a new baseline, and you should treat them that way. Google’s 2024 Ads Safety report outlines AI-powered systems that blocked billions of policy-violating ads and suspended ~700K advertiser accounts in 2024, contributing to a ~90% reduction in impersonation-scam ad reports (Google). On Meta, expanded brand-safety and placement controls, collaboration with IAS, and options like disabling comments on ads improve advertiser control.

- Turn on the strongest defaults and verify the change in your logs. Don’t assume they’re enabled by default.

- Pair platform-native controls with independent verification so you can reconcile differences and get a fuller picture.

- Use inclusion lists even on “safe” inventory. Your risk tolerance and context needs are unique, and so are your secure data policies.

These features make it easier to protect scale in AI ads without sacrificing performance, provided you implement them deliberately and keep brand safety rules visible during each ad campaign.

How does geography shape brand safety choices?

Context and regulation vary by market, so your safety controls and creative need local tuning. Linguistic nuance and cultural reference can flip the meaning of a phrase; a generic blocklist can erase essential local news or minority voices. Local publisher relationships and country-specific newsroom quality ratings can significantly improve brand safety outcomes in AI ads.

Regulatory posture matters, too. In regions with stricter privacy laws and data-residency rules, ensure your stack aligns accordingly, especially around consent, identity resolution, and storage. The right clean-room strategy and vendor contracts preserve performance while honoring local constraints on secure data.

Lastly, newsroom environments differ. Political cycles, conflicts, or natural disasters shift sensitivity. Build flexibility into your controls so you can widen or narrow adjacency thresholds by country without rebuilding your entire ad campaign playbook.

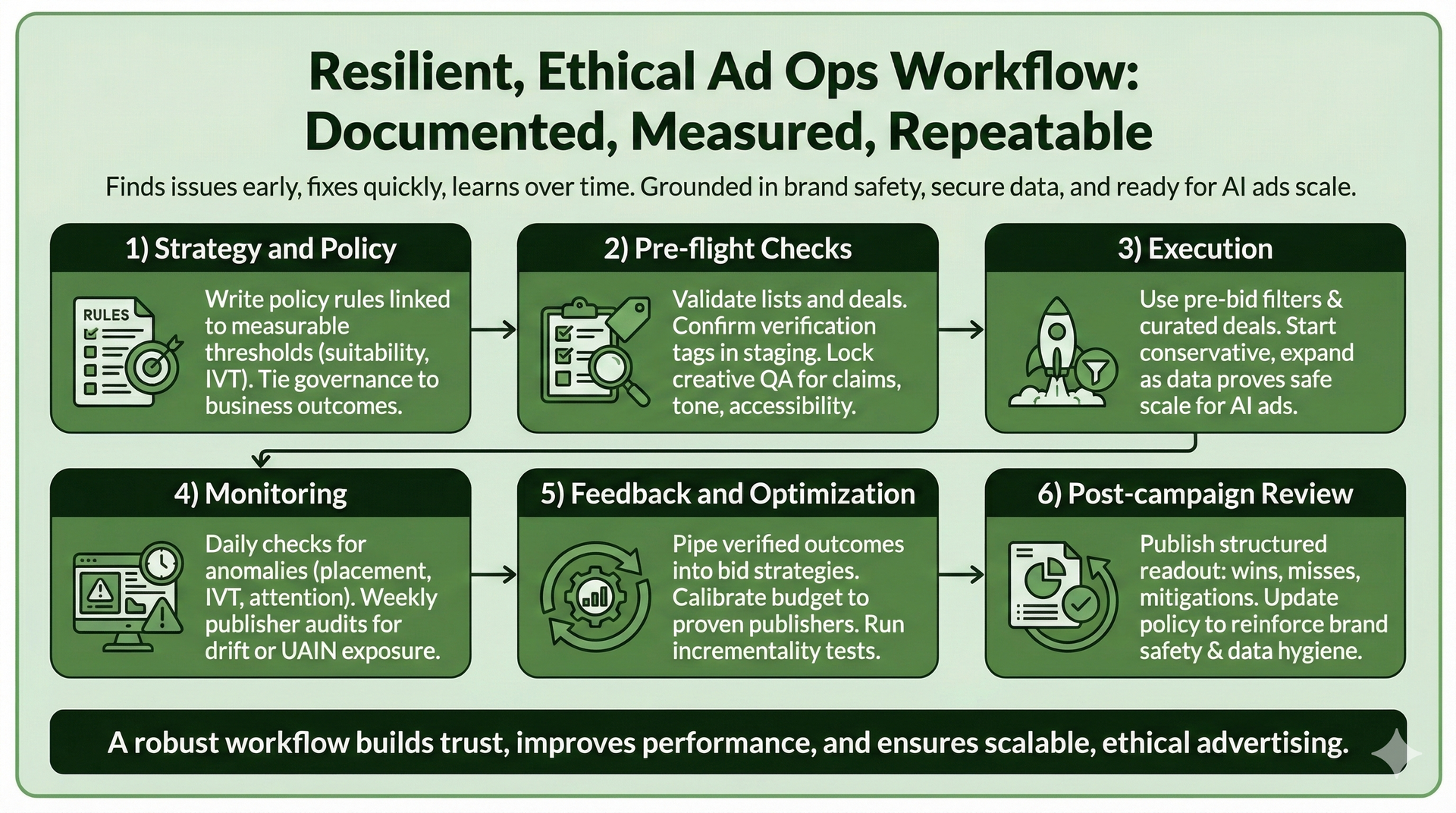

What does a resilient, ethical ad ops workflow look like?

It’s documented, measured, and repeatable. A good workflow finds issues early, fixes them quickly, and learns from them over time. Here’s a blueprint your team can adapt, grounded in brand safety, aligned to secure data best practices, and ready for AI ads scale.

- 1) Strategy and policy

Write policy rules linked to measurable thresholds (suitability levels, IVT, publisher integrity). Tie governance to business outcomes, so teams see the “why,” not just the “no.”

- 2) Pre-flight checks

Validate inclusion lists and marketplace deals. Confirm verification tags fire correctly in a staging run. Lock creative QA for claims, tone, and accessibility across each ad campaign.

- 3) Execution

Use pre-bid filters plus curated deals. Start with conservative adjacency settings and expand as data proves a safe scale for your AI ads.

- 4) Monitoring

Daily checks for anomalies: placement, IVT, suitability, attention. Weekly publisher audits to spot drift or emerging UAIN exposure (Dentsu; Newsweek).

- 5) Feedback and optimization

Pipe verified outcomes back into bid strategies. Calibrate budget toward publishers with proven post-click quality. Run incrementality tests regularly to benefit every ad campaign.

- 6) Post-campaign review

Publish a structured readout: wins, misses, mitigation steps, and updates to your policy to reinforce brand safety and secure data hygiene.
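The daily anomaly check in the monitoring step above could start as simply as the following sketch, which flags any day whose IVT rate exceeds the trailing mean plus a multiple of the trailing standard deviation. The window length, the multiplier `k`, and the sample rates are assumptions for illustration; a production monitor would likely use a more robust method.

```python
from statistics import mean, stdev


def flag_anomalies(daily_rates, window=7, k=3.0):
    """Return indexes of days whose rate exceeds trailing mean + k * stdev."""
    flagged = []
    for i in range(window, len(daily_rates)):
        history = daily_rates[i - window:i]
        limit = mean(history) + k * stdev(history)
        if daily_rates[i] > limit:
            flagged.append(i)
    return flagged


# Hypothetical daily IVT rates; index 8 contains a spike.
ivt_rates = [0.010, 0.012, 0.011, 0.009, 0.010, 0.011, 0.010, 0.011, 0.045, 0.010]

spike_days = flag_anomalies(ivt_rates)
print("Days to review:", spike_days)
```

One limitation to keep in mind: a spike inflates the trailing window for the days that follow, so a second, smaller anomaly shortly afterward may go unflagged.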

Real-world findings you can act on today

- Consumer expectations: 82% care about appropriate adjacency; 75% penalize brands near misinformation; 51% churn after offensive adjacency (IAS).

- Budget risk: 70%+ of spend is programmatic; 60% of marketers cite safety as a top concern; “millions” go to low-quality placements (MediaBrief).

- Fraud signal: 393 programmatic ads from 141 brands observed on UAIN domains (Newsweek).

- AI missteps: 70%+ of marketers report AI-related ad errors; only ~35% plan to increase governance (IAB).

- Platform defenses: Google blocked billions of bad ads and suspended ~700K accounts, cutting impersonation scam reports by ~90%; Meta expanded safety controls and third-party integrations (Google).

- Emerging norms: European Advertising Council guidelines emphasize oversight and transparency (The Brussels Times).

FAQ: Brand safety and AI in modern advertising

Q1) What are the best AI tools for creating ads without sacrificing safety?

Favor tools with built-in claim checks, tone moderation, and human approval workflows. Look for vendor attestations on training data, audit logs for prompts/outputs, and policy hooks to help your reviewers enforce rules. Integration with verification partners for context-aware creative testing is a plus. Pilot with a small budget and a pre-defined QA checklist before scaling your AI ads across an ad campaign, and ensure secure data policies are enforced end-to-end to protect brand safety.

Q2) Which are the best platforms for ethical content sourcing when building creative libraries?

Use licensed, reputable libraries with clear provenance and rights management, and consider partners that provide authenticity signals and model cards for training data. Pair this with a procurement checklist: content licensing terms, documentation of human moderation, and takedown SLAs. Reference independent assessments and industry groups for standards. This protects brand safety, limits legal risk, and keeps AI automation compliant with ethical standards while keeping data secure.

Q3) Where can I find the best examples of ethical AI in advertising to guide my policy?

Study case studies that show measured outcomes: brand suitability pass rates, reduced IVT, and quality-of-conversion gains. The European Advertising Council’s guidelines (reported by The Brussels Times) offer a strong baseline, while platform safety reports (e.g., Google) provide practical examples of enforcement. Then codify what’s relevant into your ad campaign rules so AI ads align with brand safety from day one.

Q4) How do I test whether brand safety rules are hurting scale?

Run controlled holdouts. Split a portion of the spend between a “standard safety” configuration and an “enhanced safety” profile. Compare reach, cost, attention, and post-click quality, not just CPM and CTR. If enhanced safety reduces scale, analyze which categories or publishers were cut; replace them with curated lists rather than relaxing standards globally. Keep secure data and privacy constraints intact across both arms of the test so results genuinely reflect brand safety trade-offs in AI ads.
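A minimal sketch of the comparison might look like this. The spend, reach, and qualified-conversion figures are invented, and the sketch deliberately omits significance testing, which a real holdout would need before acting on the result.

```python
def arm_summary(spend: float, reach: int, qualified_conversions: int) -> dict:
    """Summarize one test arm on reach, cost of reach, and conversion quality."""
    return {
        "reach": float(reach),
        "cost_per_1k_reach": spend / reach * 1000,
        "cost_per_qualified": spend / qualified_conversions,
    }


def relative_change(enhanced: dict, standard: dict) -> dict:
    """Relative difference of the enhanced arm versus the standard arm."""
    return {k: (enhanced[k] - standard[k]) / standard[k] for k in standard}


# Hypothetical results from two arms with equal budgets.
standard = arm_summary(spend=10_000, reach=500_000, qualified_conversions=200)
enhanced = arm_summary(spend=10_000, reach=400_000, qualified_conversions=250)

for metric, change in relative_change(enhanced, standard).items():
    print(f"{metric}: {change:+.0%}")
```

In this invented example the enhanced profile reaches fewer people at a higher cost per thousand, yet delivers qualified conversions more cheaply, which is the kind of trade-off CPM and CTR alone would hide.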

Q5) How should I prioritize fixes if I discover unsafe placements mid-flight?

Think containment, triage, prevention. First, pause the impacted groups and increase verification sensitivity. Second, audit the specific publishers and categories to identify systemic gaps. Third, add controls (inclusion lists and refined filters) and document the incident so that the policy, settings, and team habits evolve. Reinforce secure data guardrails, ensure the current ad campaign and future AI ads inherit the changes, and log learnings in your brand safety playbook.

Ready to turn safety into a performance advantage?

You don’t have to pick between ethics and growth. With the right policy, tools, and operating rhythm, you’ll protect your brand while improving acquisition quality and long-term revenue. That’s the work we do every day, applying rigorous controls and smart optimization to your AI automation so your teams can move fast without breaking trust. If you want a partner who builds for scale, safety, and speed, let’s talk. Book a strategy session to get a practical roadmap.

Works Cited

Integral Ad Science. “State of Brand Safety Research 2024.” Integral Ad Science, 2024, integralads.com.

MediaBrief Staff. “WARC’s Future of Programmatic 2024 Report.” MediaBrief, 2024, mediabrief.com.

“How Big Brands Support Unreliable AI-Generated Sites.” Newsweek, 11 Aug. 2023, newsweek.com.

Interactive Advertising Bureau (IAB). “AI Adoption Is Surging in Advertising—But Is the Industry Prepared for Responsible AI?” IAB, Aug. 2025, iab.com.

Google. “Our 2024 Ads Safety Report.” Google Blog, 2025, blog.google.

Dentsu. “Brand Safety: Things to Know in 2024.” Dentsu Global, 2024, dentsu.com.

Social Discovery Insights. “Meta Introduces Expanded Brand Safety and Ad Placement Controls.” 11 Oct. 2024, socialdiscoveryinsights.com.

The Brussels Times. “Advertising Council Launches Guidelines for Ethical Use of AI.” 2025, brusselstimes.com.